This web page is a summary of

Y.Mukaigawa, S.Mihashi and T.Shakunaga,

"Photometric Image-Based Rendering for Virtual Lighting Image Synthesis",

Proc. of Second IEEE and ACM International Workshop

on Augmented Reality (IWAR'99), pp.115-124, (1999)

This page contains only experimental results. If you want to know

the details of our PIBR method, please read the proceedings of

IWAR'99.

You can get a PostScript file of the full paper here.

(PostScript:1.8MB)

Photometric Image-Based Rendering for Virtual Lighting Image Synthesis

Yasuhiro MUKAIGAWA, Sadahiko MIHASHI and Takeshi SHAKUNAGA

Abstract

A concept named Photometric Image-Based Rendering (PIBR) is

introduced for a seamless augmented reality. The PIBR is

defined as Image-Based Rendering which covers appearance

changes caused by the lighting condition changes, while

Geometric Image-Based Rendering (GIBR) is defined as

Image-Based Rendering which covers appearance changes caused

by the view point changes. The PIBR can be applied to image

synthesis to keep photometric consistency between virtual

objects and real scenes in an arbitrary lighting condition.

We analyze the conventional IBR algorithms, and formalize the

PIBR in the whole IBR framework. A specific algorithm is also

presented for realizing the PIBR. The photometric

linearization makes a controllable framework for the PIBR,

which consists of four processes: (1) separation of

environmental illumination effects, (2) estimation of lighting

directions, (3) separation of specular reflections and

cast-shadows, and (4) linearization of self-shadows. After the

photometric linearization of input images, we can synthesize

any realistic images which include not only diffuse

reflections but also self-shadows, cast-shadows and specular

reflections. Experimental results show that realistic images

can be successfully synthesized while keeping photometric

consistency.

Photometric Image-Based Rendering

First, we show image syntheses of a glossy ceramic pot. Keeping a

halogen light far from the pot, we took 27 images while changing

the position of the light source. The input images were

linearized, and principal component analysis was applied to

obtain three optimal base images. Several lighting directions

corresponding to the coefficients were specified, and virtual images

were synthesized. One of the synthesized images is shown below.

The left is a synthesized image without nonlinear factors. Both diffuse

reflections and self-shadows are correctly synthesized. The right is

a synthesized image with nonlinear factors, which were selected by the

nearest neighbor method. We can see that realistic images with

the appropriate surface properties can be synthesized by the proposed

PIBR method.

Synthesized image by the PIBR.

LEFT: Synthesized image without nonlinear factors.

RIGHT: Synthesized image with nonlinear factors.

You can see a movie sequence by clicking on each image.

(MPEG: 2MB)
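
The two synthesis steps described in this section can be sketched roughly as follows. This is only a minimal illustration, not the implementation used in the paper. It assumes that images are flat lists of pixel intensities, that a linearized image is a weighted sum of the three base images, that negative sums correspond to self-shadows and are clipped to zero, and that the nonlinear factors (specular reflections and cast-shadows) are stored per input image as a residual. The names synthesize_linear, add_nonlinear_factors, input_coeffs and input_residuals are hypothetical.

```python
import math


def synthesize_linear(base_images, coeffs):
    """Weighted sum of linearized base images (flat lists of pixel values).

    Assumption: a negative sum marks a self-shadowed pixel, so it is clipped to 0.
    """
    if len(base_images) != len(coeffs):
        raise ValueError("need one coefficient per base image")
    n_pixels = len(base_images[0])
    return [
        max(sum(c * b[p] for c, b in zip(coeffs, base_images)), 0.0)
        for p in range(n_pixels)
    ]


def add_nonlinear_factors(linear_image, coeffs, input_coeffs, input_residuals):
    """Add the nonlinear residual of the nearest input image.

    Assumption: "nearest" means the smallest Euclidean distance between the
    requested coefficients and the coefficients of each input image.
    """
    nearest = min(
        range(len(input_coeffs)),
        key=lambda i: math.dist(coeffs, input_coeffs[i]),
    )
    residual = input_residuals[nearest]
    # Clip again so that a negative residual cannot produce a negative intensity.
    return [max(v + r, 0.0) for v, r in zip(linear_image, residual)]
```

Calling synthesize_linear alone would correspond to the LEFT image (diffuse reflections and self-shadows only), and passing its result to add_nonlinear_factors would correspond to the RIGHT image, under the assumptions stated above.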

Application for Augmented Reality

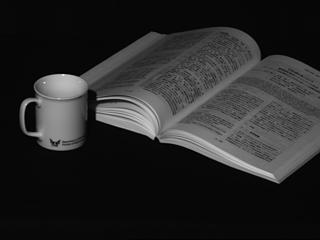

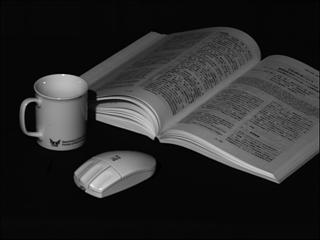

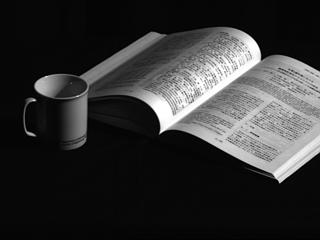

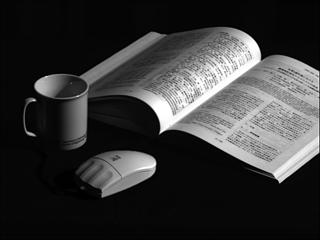

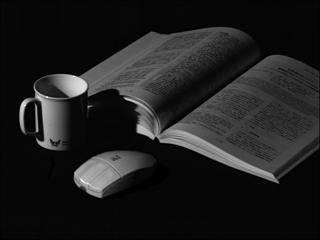

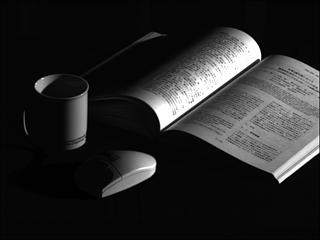

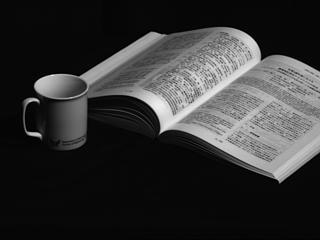

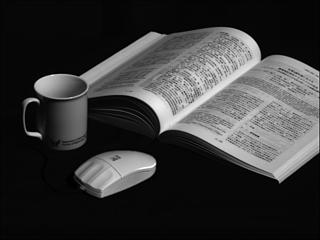

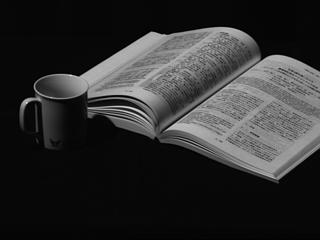

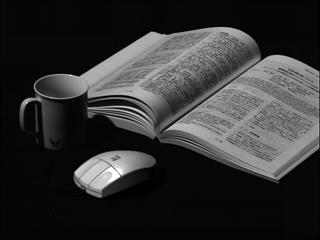

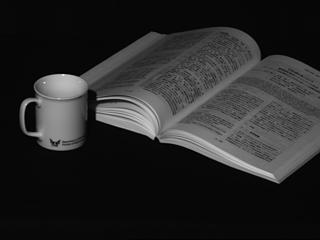

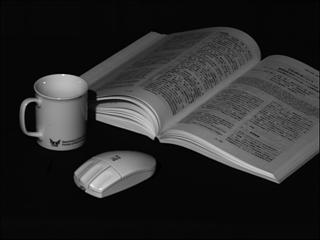

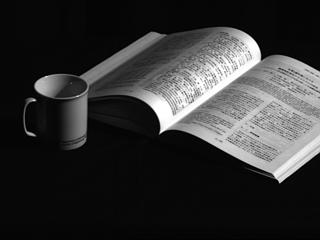

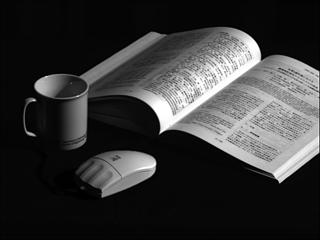

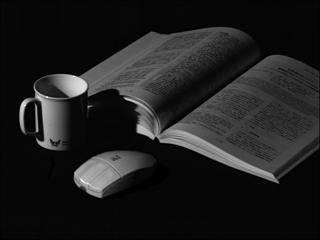

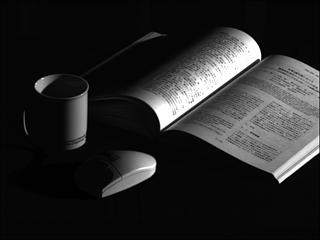

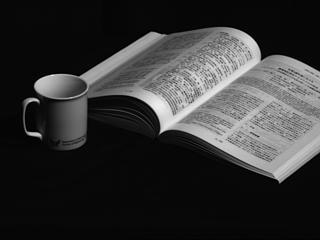

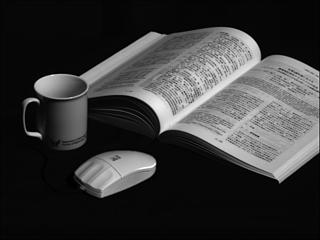

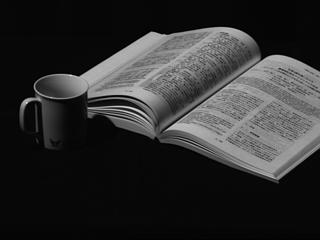

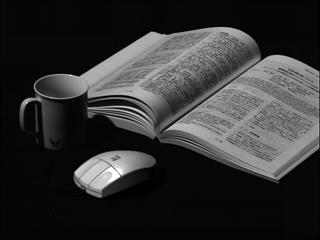

Next, we show some results of mixing virtual objects into real scenes

while keeping photometric consistency. The left images are the real

scenes into which the virtual object is to be mixed. The virtual object

is synthesized by the PIBR to have the same lighting direction as the

real scene. The right images are the mixed images: the mouse is a

virtual object, while the cup and the book are real ones. Since the

photometric properties are consistent between the real scene and the

virtual object, the mixed image looks realistic.
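
As a rough illustration of the mixing step, the sketch below overlays a synthesized virtual object on a real scene. How the object region was actually determined is not described on this page, so the per-pixel mask used here is an assumption, and the function name composite is hypothetical. Images are again flat lists of pixel intensities.

```python
def composite(real_scene, virtual_object, mask):
    """Blend a virtual object into a real scene, pixel by pixel.

    mask is assumed to hold values in [0, 1]: 1.0 shows the virtual object,
    0.0 keeps the real scene, and values in between blend the two.
    """
    if not (len(real_scene) == len(virtual_object) == len(mask)):
        raise ValueError("all inputs must have the same number of pixels")
    return [
        m * v + (1.0 - m) * r
        for r, v, m in zip(real_scene, virtual_object, mask)
    ]
```

The blend only looks plausible if the virtual object was synthesized for the same lighting direction as the real scene, which is the point made in the text above.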